Introduction

When it comes to SEO (Search Engine Optimization), most people focus on the website’s structure, content, and backlinks. However, the small robots.txt file is also very important for SEO. It is the first file that search engine bots like Google, Bing, or Yahoo check when crawling your website. Therefore, creating this file properly can improve how your website is crawled and indexed, and with that its SEO performance.

What is Robots.txt?

Robots.txt is a simple text file placed in the root directory of a website (e.g. https://example.com/robots.txt). It is used to instruct search engine bots which pages to crawl and which pages to avoid.

For example:

User-agent: *

Disallow: /private/

Allow: /public/

In this example, User-agent: * means that the rules apply to all bots. The instructions are to not crawl the /private/ folder and to explicitly allow /public/. Any path that matches no rule may also be crawled by default.

This file mainly operates on two rules, Allow and Disallow. Sometimes Crawl-delay and Sitemap URLs are also given in it.
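As a rough illustration, the example rules above can be checked with Python's standard-library `urllib.robotparser`. The `is_allowed` helper and the example.com URLs are assumptions made for this sketch, and this parser only approximates how a real search engine evaluates the file.

```python
from urllib.robotparser import RobotFileParser

# The example rules from above, one directive per line.
EXAMPLE_RULES = """\
User-agent: *
Disallow: /private/
Allow: /public/
"""


def is_allowed(rules: str, url: str, user_agent: str = "*") -> bool:
    """Return True if `user_agent` may crawl `url` under `rules`."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Placeholder paths: one blocked, one explicitly allowed, one unmatched.
    for path in ("/private/report.html", "/public/index.html", "/about.html"):
        print(path, is_allowed(EXAMPLE_RULES, "https://example.com" + path))
```

The third path matches no rule, so it is treated as crawlable, which is why a Disallow line is what actually restricts a bot.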

Its importance in SEO

- Guide to search engine bots

Without robots.txt, search engine bots may crawl any page they can reach on your website. With a well-written file, you can clearly tell the bots which pages are important and which ones to avoid.

- Avoiding duplicate content

E-commerce and news websites often serve several versions of the same content. Blocking such duplicate pages with robots.txt keeps crawlers focused on the main version.

- Reducing server load

On large websites, bots repeatedly crawl all the pages. This causes unnecessary load on the server. The load can be reduced by setting a crawl-delay or disallowing some pages.

- Privacy and security

Some folders (like /admin/, /tmp/) should not be visible to search engines. You can keep well-behaved crawlers out of them using robots.txt. The file itself is public and is not access control, so sensitive areas still need a login.

- Mentioning the Sitemap

Providing a Sitemap URL in robots.txt helps search engines easily find all the pages on your website, which speeds up indexing.

Consequences of not having a proper robots.txt

- Indexing of unnecessary pages

If there is no robots.txt, unwanted pages like /search/, /tags/, /private/ may appear in the search results. This reduces the professional look of the website.

- Decreased SEO performance

If duplicate content is crawled, ranking signals are split across the copies and the main pages are less likely to appear at the top.

- Wasting crawl budget

Google has a limited “crawl budget” for large websites. If bots crawl unwanted pages, it delays the indexing of really important pages.

- Security risk

If /admin/ or sensitive files are indexed, hackers can learn the internal structure of the website.

- Incorrect information appears in search engines

If the robots.txt is incorrect or incomplete, some important pages may be blocked and may not appear on Google.

What is a Robots.txt Generator Tool?

While running a website, it is important to control how search engines like Google, Bing crawl your site.

The file used for this is robots.txt.

👉 In simple terms, it is a small text file that tells search engine bots (also called web crawlers or spiders) which parts of your site to crawl and which to avoid.

For example:

- If you don’t want the admin panel, private pages, or testing URLs to appear in Google, you can Disallow them in robots.txt.

- By providing a sitemap URL, you help search engines index your content quickly and correctly.

That is, robots.txt is an important file in SEO that controls how crawlers move through your site.

Manual vs Automatic Methods

1. Manual Method

- In this method, the developer creates a text file and writes rules like User-agent, Allow, Disallow, Crawl-delay, and Sitemap in it.

- This file is uploaded to the root directory of the website under the name /robots.txt.

- But the problem is that it is not easy to understand all the rules correctly, write the syntax correctly and avoid mistakes in it.

- Even a small mistake can block the entire site from search engines.

2. Automatic Method (Generator Tool)

- Here you do not need to write rules manually.

- Just fill in a few fields – such as domain, user-agent, allow/disallow URLs, crawl delay, sitemap.

- The tool automatically creates a perfect robots.txt file.

- You can copy it or download it in a single click and place it on your website.

- This method is simple, fast and error-free.
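To make the automatic method concrete, here is a minimal sketch of how a generator might turn form fields into robots.txt text. The function name `build_robots_txt` and its parameters are hypothetical and chosen for this example; this is not the actual implementation of any particular tool.

```python
from typing import Optional, Sequence


def build_robots_txt(
    user_agent: str = "*",
    allow: Sequence[str] = (),
    disallow: Sequence[str] = (),
    crawl_delay: Optional[int] = None,
    sitemap: Optional[str] = None,
) -> str:
    """Assemble robots.txt text from form-style fields (hypothetical helper)."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallow]
    lines += [f"Allow: {path}" for path in allow]
    if crawl_delay is not None:
        lines.append(f"Crawl-delay: {crawl_delay}")
    if sitemap:
        # The Sitemap line is not tied to a user-agent group,
        # so it is separated from the group by a blank line.
        lines += ["", f"Sitemap: {sitemap}"]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(build_robots_txt(
        disallow=["/private/"],
        allow=["/public/"],
        sitemap="https://example.com/sitemap.xml",
    ))
```

Generating the file from structured fields avoids the typos and misplaced colons that tend to creep into hand-written rules.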

How does our tool help?

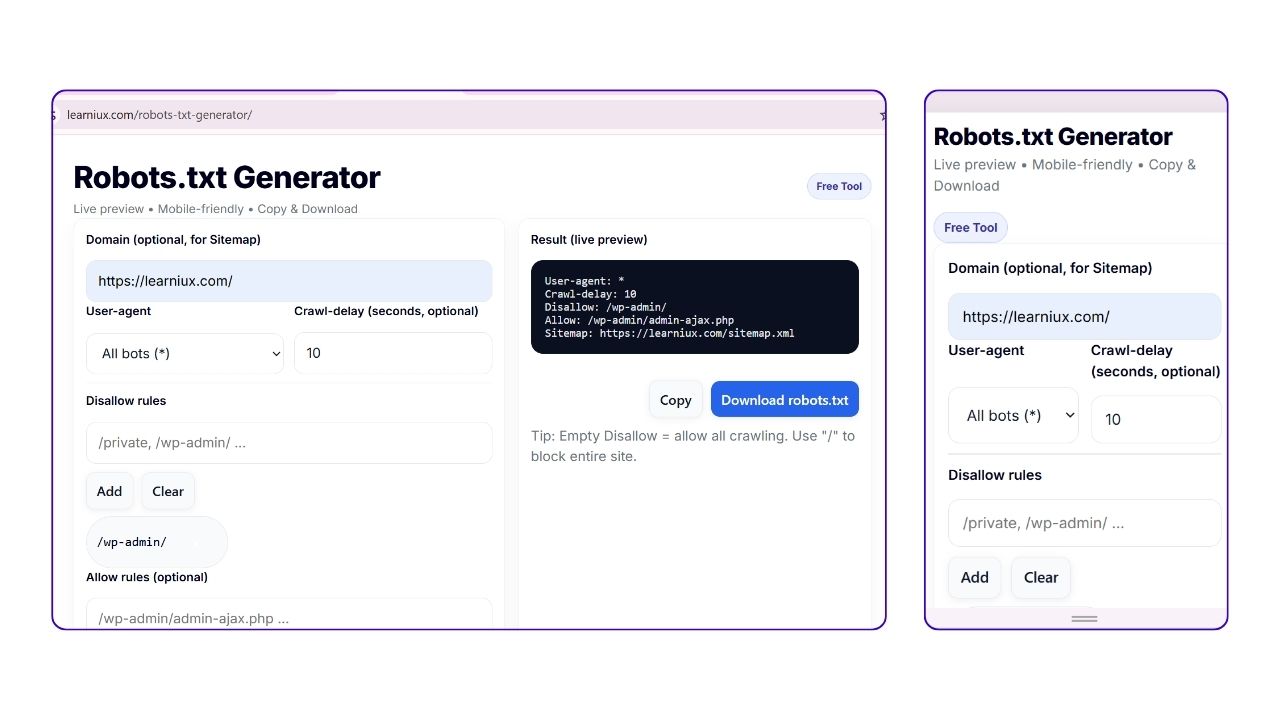

Our Robots.txt Generator Tool is specially designed in a user-friendly way for everyone from beginners to experts.

✅ Easy Interface – Create robots.txt according to your needs in just a few seconds.

✅ Ready-to-use Options – Predefined templates for platforms like WordPress, Blogger, Shopify.

✅ Error Free – No need to worry about incorrect syntax, the tool automatically creates the file in the correct format.

✅ Copy & Download – Copy or download in one click.

✅ SEO Friendly – Improves the visibility and ranking of your website due to proper crawling and indexing.

✅ Mobile & Desktop Friendly – Can be easily used on any device.

The biggest advantage is that using this tool you can create a professional robots.txt file without any technical knowledge. This will improve the SEO of your site and prevent unwanted pages from being indexed in search engines.

Boost your SEO with a perfect Robots.txt file

Don’t let search engines crawl the wrong pages on your site. Create a clean, error-free, and SEO-friendly robots.txt file in just a few clicks using our free Robots.txt generator tool.

Key features of our Robots.txt generator tool

When it comes to SEO optimization, one of the most overlooked but extremely important files on a website is the robots.txt file. This small text file plays a major role in guiding search engine crawlers on which parts of your website to index and which to keep hidden. Creating and managing this file manually can be confusing for beginners as well as time-consuming for professionals. That’s why we created our Robots.txt Generator tool, a user-friendly, mobile and desktop-compatible tool that is designed to make the process simple, accurate and efficient.

Below, let’s take a detailed look at the key features of this tool and why it is a must-have for every website owner, blogger or SEO professional.

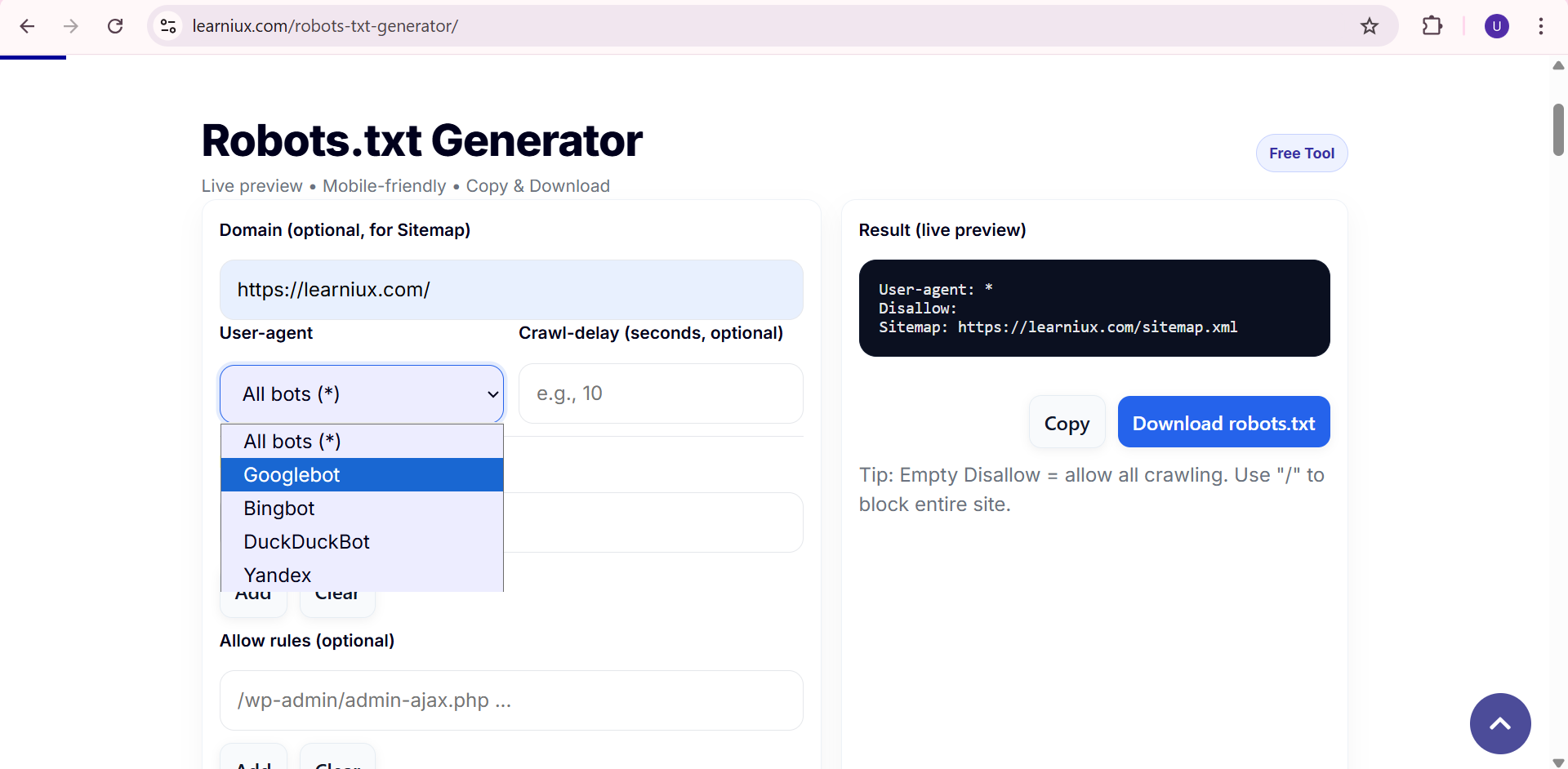

1. User-agent selection (Googlebot, Bingbot, all bots)

One of the most important sections of the robots.txt file is the user-agent directive. This line specifies which search engine crawlers the rules will apply to.

- Googlebot → This is Google’s web crawler, which is responsible for indexing your website for Google search results.

- Bingbot → Microsoft’s crawler, which handles indexing for Bing and Yahoo search engines.

- All bots (*) → With this option, you can define universal rules that apply to every search engine crawler that visits your site.

Our generator makes this selection incredibly easy. Instead of remembering the exact syntax or typing it in manually, you simply select the bot from the dropdown menu and the tool automatically generates the appropriate instructions.

2. Allow / Disallow Rules

You don’t need to index every page of your website. For example, you might want search engines to ignore admin pages, private files, or duplicate content. On the other hand, you might want them to index your most important pages.

With our tool, you can easily set:

- Allow Rules → Tell crawlers exactly which parts of your website they are allowed to access.

- Disallow Rules → Block crawlers from accessing private or unnecessary sections, such as /private/, /tmp/, or /cart/.

This feature ensures that search engines focus on content that is truly important for SEO, while avoiding irrelevant or sensitive pages.

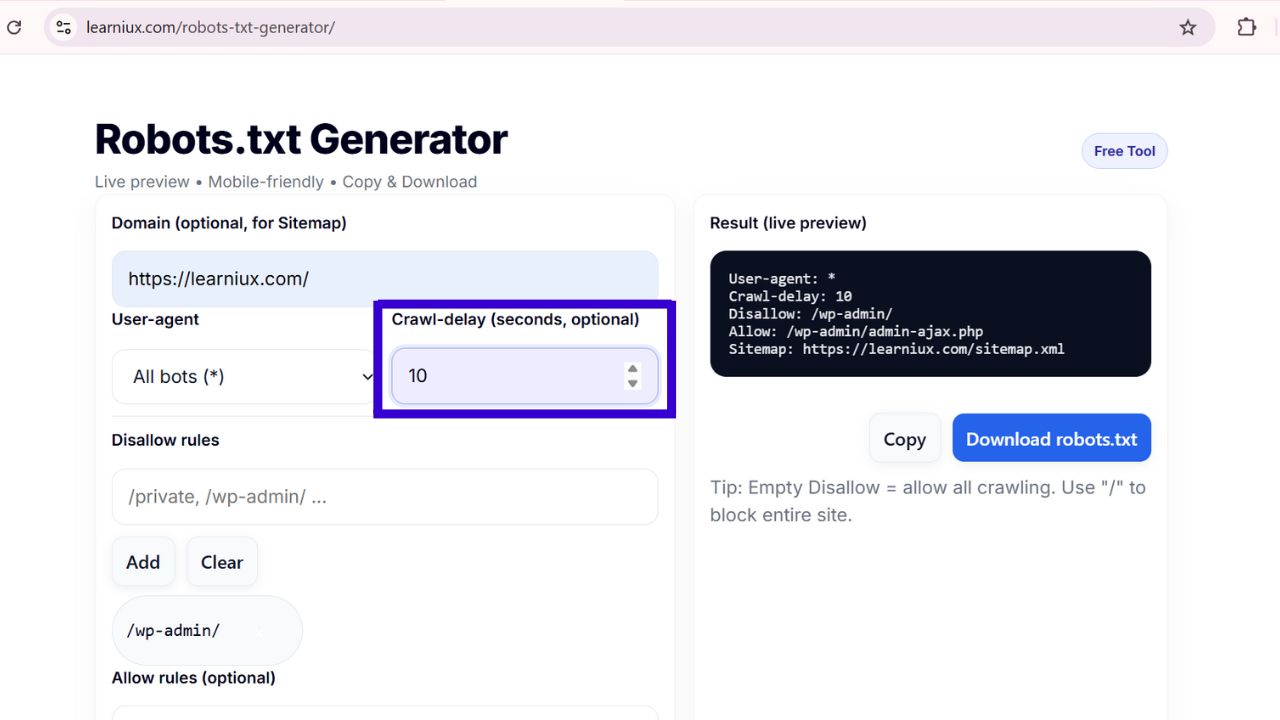

3. Crawl-delay setting

Some websites, especially smaller websites, can experience performance issues if search engine crawlers request too many pages at once. That’s where the crawl-delay directive comes in.

- By setting the crawl-delay (in seconds), you ask bots to wait between requests. Support varies by crawler: Bingbot honors the directive, while Googlebot ignores it.

- This helps reduce server load, ensures smooth performance, and prevents downtime caused by excessive crawling.

Our generator allows you to easily set this delay with a simple input field. Whether you want to slow bots down to 10 seconds per request or keep it instant, you’re in complete control.

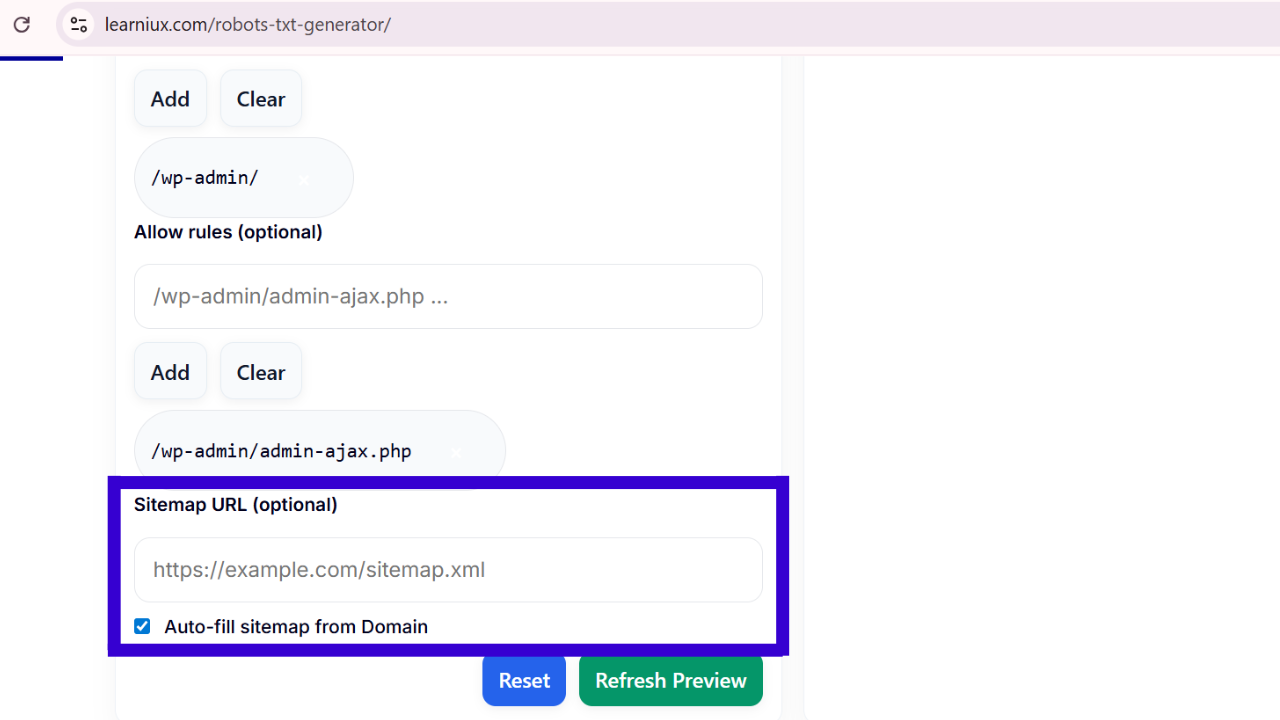
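As a small sketch of how a crawler might read this setting, Python's standard-library `urllib.robotparser` exposes the value through `crawl_delay()`. The rules text and the `read_crawl_delay` helper below are made up for illustration.

```python
from typing import Optional
from urllib.robotparser import RobotFileParser

# Illustrative rules: ask every bot to wait 10 seconds between requests.
RULES_WITH_DELAY = """\
User-agent: *
Crawl-delay: 10
Disallow: /tmp/
"""


def read_crawl_delay(rules: str, user_agent: str = "*") -> Optional[int]:
    """Return the Crawl-delay that applies to `user_agent`, or None if unset."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.crawl_delay(user_agent)


if __name__ == "__main__":
    print(read_crawl_delay(RULES_WITH_DELAY))
```

A polite crawler would sleep for that many seconds between requests; when the function returns None, the file sets no delay.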

4. Sitemap Integration

Every optimized website should have a sitemap, which acts as a blueprint for search engines. By adding your sitemap URL to robots.txt, you make it easier for crawlers to find and index all of your important pages.

Our tool has a dedicated field where you can paste your sitemap URL (e.g., https://example.com/sitemap.xml). The generator then automatically inserts the appropriate line into your robots.txt file. This saves time and ensures that your website’s crawling process is more efficient.

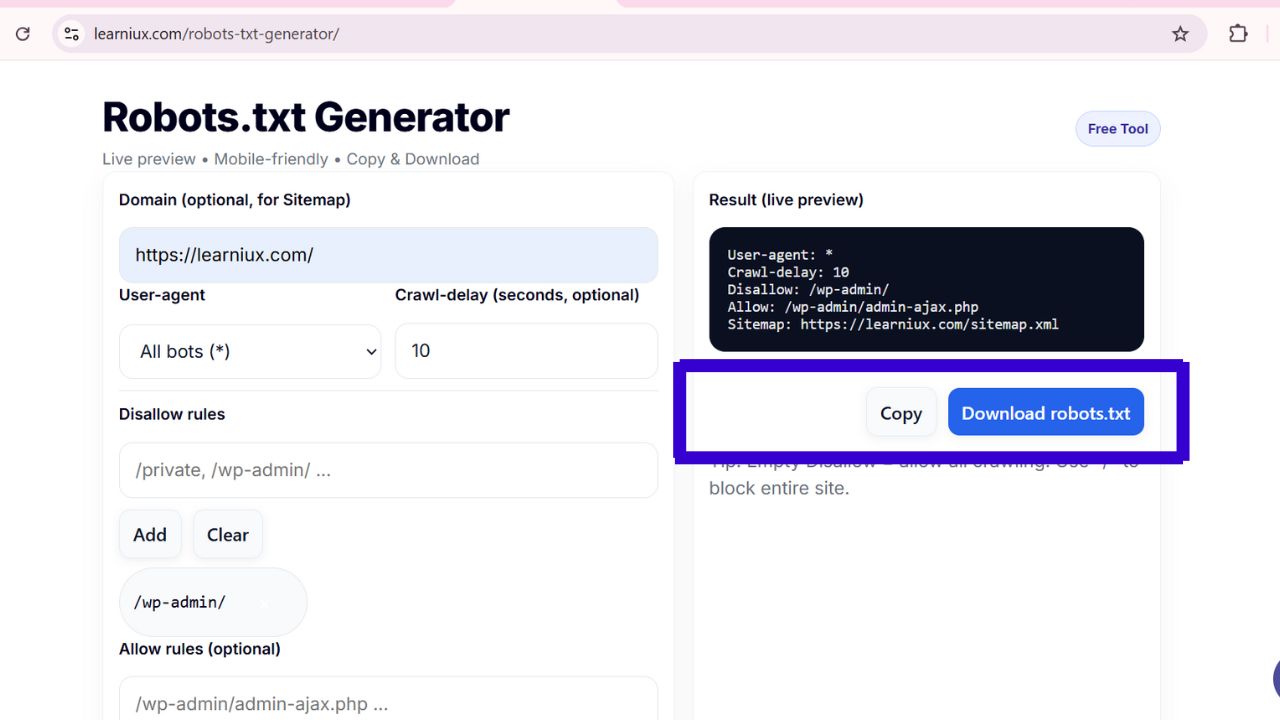
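The Sitemap line should hold a full URL, not a relative path. The sketch below, which assumes Python 3.8 or newer for `RobotFileParser.site_maps()`, extracts sitemap entries and checks that each one is absolute. The helper names `sitemap_urls` and `is_absolute_url` are hypothetical.

```python
from typing import List
from urllib.parse import urlsplit
from urllib.robotparser import RobotFileParser

# Illustrative file content using the sitemap URL from the text above.
RULES_WITH_SITEMAP = """\
User-agent: *
Disallow: /private/

Sitemap: https://example.com/sitemap.xml
"""


def sitemap_urls(rules: str) -> List[str]:
    """Return every Sitemap URL declared in the robots.txt text."""
    parser = RobotFileParser()
    parser.parse(rules.splitlines())
    return parser.site_maps() or []  # site_maps() returns None when empty


def is_absolute_url(url: str) -> bool:
    """True if `url` has an http(s) scheme and a host name."""
    parts = urlsplit(url)
    return parts.scheme in ("http", "https") and bool(parts.netloc)


if __name__ == "__main__":
    for url in sitemap_urls(RULES_WITH_SITEMAP):
        print(url, is_absolute_url(url))
```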

5. Copy and Download Options

Once your robots.txt file is created, you need an easy way to use it on your website. That’s why our tool includes:

- Copy Options → Instantly copy the generated file to your clipboard with one click.

- Download Options → Save the file directly as robots.txt so you can upload it to the root directory of your website.

No manual formatting, no extra effort – just generate, copy or download in seconds.

6. Mobile and desktop friendly design

In today’s world, people work from multiple devices. Whether you’re at your laptop in the office or checking SEO settings on your smartphone, our tool provides a seamless experience.

- The design is responsive, meaning it automatically adapts to any screen size.

- Large input fields, a clean layout and simple buttons ensure easy use.

- The live preview box makes it easy to see your robots.txt file in real-time before copying or downloading it.

This cross-device compatibility makes our generator practical for web developers, bloggers and SEO experts on the go.

A properly optimized robots.txt file is essential for improving SEO, controlling crawler behavior, and protecting sensitive website sections. Instead of manually writing code or risking errors, our Robots.txt generator tool makes the process simple and beginner-friendly.

With features like user-agent selection, allow/disallow rules, crawl-delay, sitemap integration, and fast copy/download options, this tool is designed to save you time while ensuring professional-quality results. Plus, its mobile and desktop-friendly design means you can use it anytime, anywhere.

Whether you run a personal blog, e-commerce store, or corporate website, this tool helps you take better control of search engine crawling, giving your site the SEO advantage it deserves.

How to Use the Robots.txt Generator Tool (Step-by-Step Guide)

Robots.txt is an important file when it comes to Search Engine Optimization (SEO). This file tells search engine crawlers (Googlebot, Bingbot, etc.) which pages on your website to crawl and which ones not to. Incorrect robots.txt settings can damage your site’s SEO, while the right settings can help your website get indexed faster and more efficiently.

That’s why we’ve created the Robots.txt Generator Tool. No coding is required with this tool. Just follow a few steps and your ready-to-use robots.txt file will be created.

Let’s now see step-by-step how to use this tool.

1. Enter Domain (Optional)

In the first step, you need to enter the Domain URL of your website.

E.g. https://example.com

This is optional, but if you enter the domain, it will be easier when creating the Sitemap URL.

2. Select User-agent

Next you need to select User-agent. User-agent means the search engine bot.

The tool has three options:

- All Bots (*) → For all search engines

- Googlebot → For Google only

- Bingbot → For Bing only

If you want to set the settings for all bots, select All Bots.

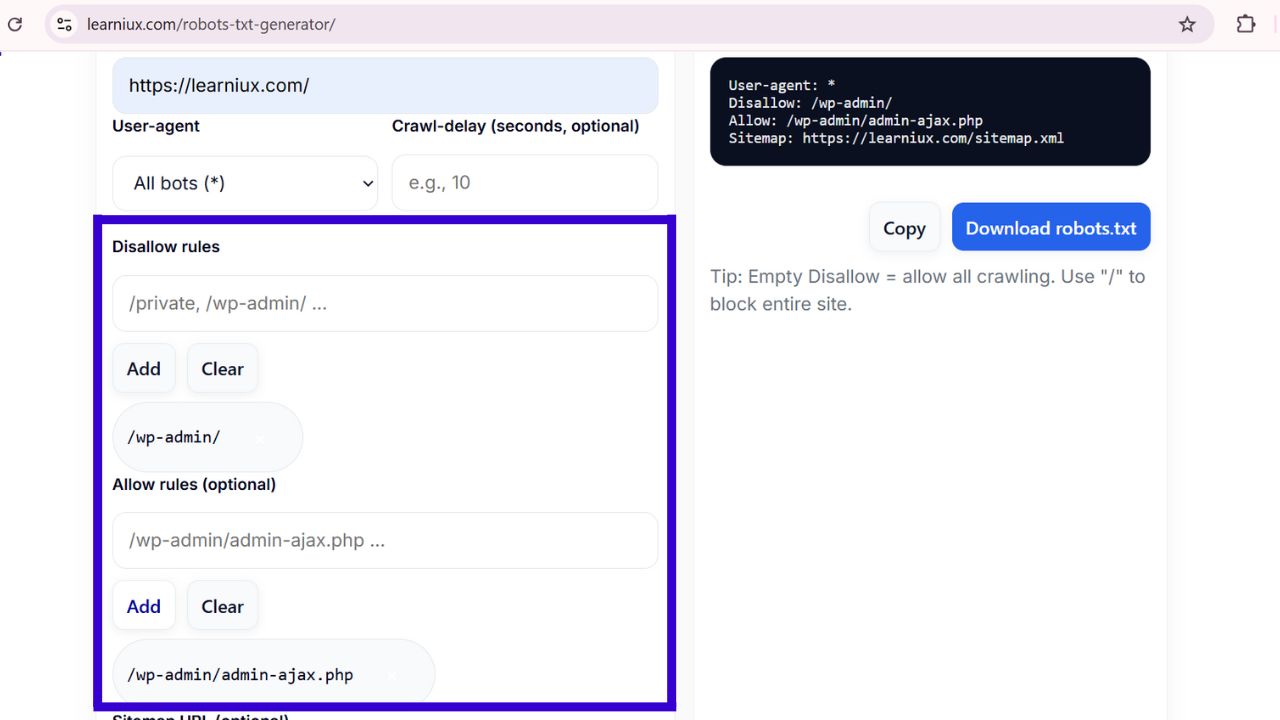

3. Add Allow/Disallow Path

This is a very important step for SEO.

- Allow Path → Pages that you want to allow search engines to crawl

- Disallow Path → Pages that you don’t want to crawl (e.g. /private, /admin)

Allow: /blog

Disallow: /checkout

This will cause search engines to crawl your blog pages, but not your checkout pages.

4. Set Crawl-delay

Sometimes the website server slows down due to high bot traffic. Crawl-delay can be used for this.

For example, if you set Crawl-delay = 10, the bot will leave a gap of 10 seconds between each request.

5. Provide a Sitemap URL

It is very useful to have a Sitemap URL in your robots.txt file. This helps search engines to quickly find all the pages of your site.

For example:

Sitemap: https://example.com/sitemap.xml

You enter your site’s Sitemap URL in the field provided in the tool.

6. Click “Generate”

After filling in all the above information, click the Generate button.

This will create your robots.txt file in the Live Preview below.

7. Copy or Download

Once the file is created, you have two options:

- Copy → Copy the contents of the file to the clipboard

- Download → Download the file as robots.txt

You can then upload this file to the root directory of your website.

Benefits of this tool

- No coding required

- SEO-friendly robots.txt file is created quickly

- Easy to use on both mobile and desktop

- Easy to copy or download

The Robots.txt file is a basic but very important thing for SEO. Incorrect settings can hide your important pages from search engines, while the right settings can help your site rank faster.

Create this file in just a few seconds and optimize your website for SEO with the help of our Robots.txt Generator Tool.

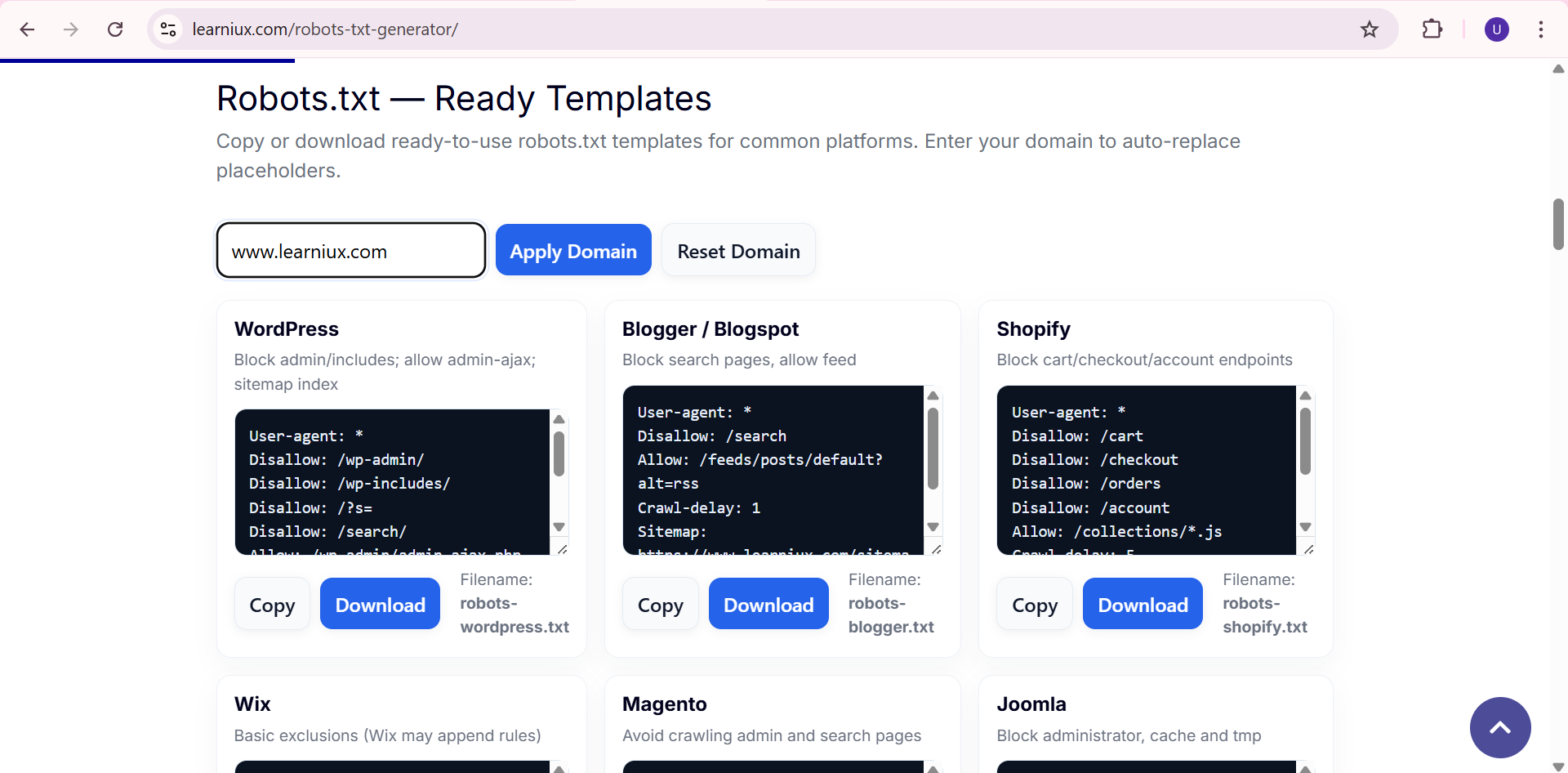

Ready-to-use Robots.txt examples for popular platforms

A properly optimized robots.txt file is a powerful tool for improving your website’s SEO and controlling how search engines crawl your content. Whether you’re running a WordPress blog, Blogger site, Shopify store, or a custom-built website, a proper robots.txt configuration ensures that only important and valuable pages are indexed, while duplicate or irrelevant pages are blocked.

Below, we’ll look at ready-to-use robots.txt examples for four popular platforms. You can copy and paste these examples directly into your website’s robots.txt file and then adjust them as needed.

1. Robots.txt for WordPress

WordPress websites expose many low-value URLs like /wp-admin/, /wp-includes/, search pages, and feed URLs. Allowing bots to crawl them wastes crawl budget and creates duplicate content issues.

✅ Optimized WordPress robots.txt Example

User-agent: *

Disallow: /wp-admin/

Disallow: /wp-includes/

Disallow: /xmlrpc.php

Disallow: /wp-content/plugins/

Disallow: /wp-content/cache/

Disallow: /?s=

Allow: /wp-admin/admin-ajax.php

Sitemap: https://example.com/sitemap_index.xml

👉 Why does this work?

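- Blocks unnecessary admin and plugin folders. If your theme or plugins load CSS or JS from /wp-includes/ or /wp-content/plugins/, remove those lines so crawlers can still render your pages.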

- Prevents search query pages (?s=) from being indexed.

- Keeps sitemaps accessible to bots.
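The WordPress example relies on a precedence rule: Google documents that the most specific (longest) matching rule wins, which is why /wp-admin/admin-ajax.php stays crawlable even though /wp-admin/ is disallowed. The sketch below illustrates that idea for plain path prefixes only (no wildcards). The function `longest_match_allows` is a hypothetical helper, not a full robots.txt parser.

```python
from typing import Iterable, Tuple

# A subset of the WordPress rules above as (directive, path prefix) pairs.
WORDPRESS_RULES = [
    ("Disallow", "/wp-admin/"),
    ("Disallow", "/wp-includes/"),
    ("Disallow", "/xmlrpc.php"),
    ("Disallow", "/?s="),
    ("Allow", "/wp-admin/admin-ajax.php"),
]


def longest_match_allows(rules: Iterable[Tuple[str, str]], path: str) -> bool:
    """Apply the longest matching prefix rule; Allow wins a tie."""
    best_len = -1
    allowed = True  # a path that matches no rule may be crawled
    for directive, prefix in rules:
        if not prefix or not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        if len(prefix) > best_len or (len(prefix) == best_len and is_allow):
            best_len = len(prefix)
            allowed = is_allow
    return allowed


if __name__ == "__main__":
    for path in ("/wp-admin/admin-ajax.php", "/wp-admin/options.php", "/blog/"):
        print(path, longest_match_allows(WORDPRESS_RULES, path))
```

Not every parser works this way. Some, including Python's `urllib.robotparser`, apply the first matching rule in file order, so placing Allow lines before the broader Disallow is the safer layout.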

2. Robots.txt for Blogger

Blogger creates many duplicate pages like blog labels, search query pages, and feeds. To keep your content SEO-friendly, you should disallow them.

✅ Optimized Blogger robots.txt example

User-agent: *

Disallow: /search

Disallow: /?m=1

Disallow: /feeds

Allow: /

Sitemap: https://yourblog.blogspot.com/sitemap.xml

👉 Why does this work?

- Stops crawling of mobile versions (?m=1) which can create duplicate URLs.

- Prevents search and feeds from being indexed.

- Ensures that main content is crawlable.

3. Robots.txt for Shopify

Shopify automatically creates duplicate pages like /collections/ with parameters, filtered URLs, and checkout pages. You should block them to save crawl budget.

✅ Optimized Shopify robots.txt example

User-agent: *

Disallow: /cart

Disallow: /checkout

Disallow: /orders

Disallow: /search

Disallow: /collections/*sort_by*

Disallow: /collections/*?*

Allow: /

Sitemap: https://example.com/sitemap.xml

👉 Why does this work?

- Blocks checkout and cart related pages.

- Prevents duplicate collection URLs from being indexed with parameters.

- Ensures that product and main pages remain crawlable.
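The Shopify rules use the `*` wildcard, which major search engines support but simple prefix matchers do not. Below is a minimal sketch of how such a pattern could be matched, assuming `*` means "any run of characters" and a trailing `$` means "end of URL". The helper names are hypothetical and the test paths are invented.

```python
import re


def pattern_to_regex(pattern: str) -> "re.Pattern":
    """Translate a robots.txt path pattern into a compiled regex."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape the literal pieces and join them with ".*" for each "*".
    body = ".*".join(re.escape(piece) for piece in pattern.split("*"))
    return re.compile(body + ("$" if anchored else ""))


def matches(pattern: str, path: str) -> bool:
    """True if `path` (including any query string) matches `pattern`."""
    # re.match anchors at the start, like a robots.txt prefix rule.
    return pattern_to_regex(pattern).match(path) is not None


if __name__ == "__main__":
    print(matches("/collections/*sort_by*", "/collections/shoes?sort_by=price"))
    print(matches("/collections/*?*", "/collections/shoes"))
```

With this reading, /collections/shoes stays crawlable while any collection URL carrying a query string is blocked.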

4. Robots.txt for Custom Websites

For custom-coded websites, robots.txt varies by design. A good starting point is to block admin areas and allow search engines to index the main content.

✅ Optimized custom robots.txt example

User-agent: *

Disallow: /admin/

Disallow: /login

Disallow: /register

Allow: /

Sitemap: https://example.com/sitemap.xml

👉 Why does this work?

- Blocks sensitive admin and login pages.

- Keeps all content pages accessible.

- Provides sitemaps to search engines for proper crawling.

Best practices for robots.txt

- Always include your sitemap URL.

- Never block essential resources like CSS or JS files.

- Test your robots.txt file with the robots.txt report in Google Search Console.

- Keep it simple and updated as your site grows.

A well-organized robots.txt file helps search engines focus on your most valuable pages while avoiding duplicate content issues. Whether you’re running WordPress, Blogger, Shopify, or a custom site, you can get a solid foundation for your SEO by using the ready-to-use examples above.

👉 Start with these templates, customize them to your website’s needs, and regularly check Google Search Console for crawl errors.

With the right robots.txt setup, you can save crawl budget, improve site visibility, and strengthen your SEO strategy.

Why Is This Tool Useful for SEO?

Search Engine Optimization (SEO) is an important process for every website. Without proper SEO, a website will not appear well on Google, Bing or other search engines. The role of robots.txt file is very important in SEO. It is a simple text file that tells search engine bots (crawlers) which pages to crawl and which not. A properly created robots.txt saves your crawl budget, reduces duplicate content, and gives good ranking signals to the website. That is why Robots.txt Generator Tool is very useful for SEO.

Let’s see its benefits in detail:

1. Crawl Budget Optimization

Search engines give each website a crawl budget: roughly, how many pages on your website the search engine bot will crawl in a given period. This budget matters most for large websites. If the search engine bot frequently crawls pages that are not important for SEO (e.g. login pages, admin panel, filters, tags or archive pages), then the chances of crawling important pages are reduced.

With the help of Robots.txt Generator Tool, we can easily create Disallow rules. These rules tell search engines not to crawl certain specific URLs. So, instead of wasting the crawl budget, it is spent on important pages, such as homepage, product pages, services pages or blogs.

SEO Benefits:

- Fast indexing of important content

- Reduced server load

- Proper focus on ranking-worthy pages

2. Duplicate Content Control

Duplicate content is a problem that occurs on many websites. For example:

- www and non-www versions of the same page

- http and https versions

- Tags and category archives

- URLs with session IDs or query parameters

If search engine bots crawl all these versions, Google gets confused about which page is the main one. This can lead to a decrease in rankings.

With the help of the Robots.txt tool, you can block duplicate or unnecessary URLs. For example:

Disallow: /tag/

Disallow: /search/

Disallow: /?replytocom=

Such rules prevent crawlers from crawling duplicate pages and Google focuses on your canonical pages.

SEO Benefits:

- No keyword cannibalization

- Clean indexing

- Better authority for main URLs

3. Blocking Unnecessary Pages

There are some pages on a website that are not useful for search engines at all. For example:

- Admin or login pages (/wp-admin/, /admin/)

- Thank you pages

- Cart or checkout pages

- Private or test pages

If these pages appear in search engines, it spoils the user experience and has a negative impact on ranking signals.

The Robots.txt Generator Tool allows you to easily block these pages, so that your website shows only relevant and SEO-friendly pages in search results.

SEO Benefits:

- Better user experience

- Higher click-through rate (CTR)

- No waste of crawl budget

4. Better Ranking Signals

Search engines use signals to determine how optimized a website is. If bots are crawling unnecessary pages on your site, indexing duplicate content, or missing important pages, search engines are getting the wrong signals.

The Robots.txt tool allows you to declare your sitemap in a simple and organized way:

Sitemap: https://example.com/sitemap.xml

This helps search engines find and index your important URLs faster. With a well-organized crawl process, your website receives better SEO signals, which directly impacts rankings and organic traffic.

SEO Benefits:

- Improved keyword visibility

- Fast indexing of new content

- Higher domain authority

The Robots.txt Generator Tool is not just a tool, but a crucial part of your website’s SEO success. With it, you can:

- Optimize crawl budget

- Avoid duplicate content

- Block unnecessary pages

- Give clear ranking signals to search engines

A properly configured robots.txt file gives your website a strong presence in search engines and increases your organic traffic.

Conclusion

Proper Use of Robots.txt = SEO Success

In today’s digital age, every website owner is plagued by the same question – how can I bring my website to the top in search engines like Google? SEO (Search Engine Optimization) is the key to it. However, many people think that SEO means only keywords, backlinks or content optimization. But the truth is that a small file called Robots.txt also plays a huge role in achieving SEO success.

Robots.txt is a map for search engine robots (crawlers). This file tells them which pages to crawl on your website and which pages to avoid. Using Robots.txt correctly helps your website get indexed faster, keeps unnecessary pages out of the crawl, and uses the Crawl Budget properly.

Why is Robots.txt necessary?

- Saves Crawl Budget – Every website gets a certain crawl budget from Google. Without Robots.txt, unnecessary pages get crawled and important pages get left behind.

- Stops Duplicate Content – Tag pages, search results pages, and other duplicate URLs don’t need to be shown to search engines. They can be blocked by Robots.txt.

- Protects Sensitive Information – You can prevent admin sections, private directories from appearing in public searches by disallowing them.

- Improves Website Speed - Avoiding unnecessary crawl requests reduces the load on the server.

Use Free Tool and Optimize Website

Many people find it difficult to write Robots.txt manually. Incorrect syntax can block the entire website from search engines. That’s why we have created Free Robots.txt Generator Tool for you.

👉 Benefits of this tool:

- User-Friendly Dashboard – No coding required, just select the option.

- Dynamic Rules – Set Allow / Disallow path easily.

- Sitemap Integration – Add your site’s sitemap easily.

- Multi-Bot Support – Create rules for Googlebot, Bingbot or all bots.

- One-Click Copy & Download – Use the created robots.txt immediately.

With the help of this tool, anyone, be it a brand new blogger or a professional SEO Expert, can create a perfect Robots.txt file in a matter of seconds.

Things to remember when using robots.txt

- Always include the main sitemap.xml in robots.txt.

- Allow only as many pages as necessary.

- Disallow unnecessary or duplicate URLs.

- Never write a rule like “Disallow: /” – this will block the entire site.

- Test in Google Search Console after updating the file.
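Two of the points above, the site-wide "Disallow: /" and the missing sitemap, are easy to check automatically. The following is a minimal sketch of such a check. `lint_robots_txt` is a hypothetical helper that covers only these two cases and is not a full validator.

```python
from typing import List


def lint_robots_txt(text: str) -> List[str]:
    """Return warnings for two common robots.txt mistakes."""
    warnings = []
    agents = []         # user-agents of the group currently being read
    in_rules = False    # True once the current group has an Allow/Disallow
    has_sitemap = False
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments
        if ":" not in line:
            continue
        field, value = (part.strip() for part in line.split(":", 1))
        field = field.lower()
        if field == "user-agent":
            if in_rules:  # a User-agent line after rules starts a new group
                agents, in_rules = [], False
            agents.append(value)
        elif field in ("allow", "disallow"):
            in_rules = True
            if field == "disallow" and value == "/" and "*" in agents:
                warnings.append("Disallow: / under User-agent: * blocks the whole site")
        elif field == "sitemap":
            has_sitemap = True
    if not has_sitemap:
        warnings.append("No Sitemap line found")
    return warnings


if __name__ == "__main__":
    for warning in lint_robots_txt("User-agent: *\nDisallow: /\n"):
        print("WARNING:", warning)
```

Running a check like this before uploading catches the most damaging mistake, an accidental site-wide block, before any crawler sees it.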

Final Thoughts

SEO is an ongoing process. It doesn’t just focus on keywords. Crawl Budget, Index Management and Bot Control are equally important.

👉 The Robots.txt file, although small, is a game-changer for SEO Success.

Proper Robots.txt = Proper Indexing = More Organic Traffic

Have you created a proper Robots.txt for your website yet?

If not, don’t waste your time!

✅ Try our Free Robots.txt Generator Tool now and make your website SEO-friendly.

FAQs

What is Robots.txt and what is it used for?

Robots.txt is a simple text file that guides search engine bots. This file tells them which pages to crawl and which to avoid. Its main purpose is to use the Crawl Budget correctly. For example, if you do not want the admin section or private pages to be visible in search engines, you can block them using Robots.txt. This keeps sensitive information safe. Moreover, duplicate content pages can be prevented from being indexed. Using the Robots.txt file helps search engines focus only on important pages. This improves SEO performance. This file is essential for the indexing of the website in the right way.

What problems can arise if Robots.txt is not used correctly?

If the wrong rules are written in Robots.txt, serious problems can occur. For example, writing “Disallow: /” will block the entire website from search engines. In such a case, no pages will be indexed. Also, blocking the sitemap.xml file will deteriorate the crawling efficiency. Unnecessary disallow rules prevent important pages from being crawled. This reduces organic traffic to a large extent. Search engine bots get confused and create incorrect indexes. To identify such errors, it is necessary to test in Google Search Console. Although Robots.txt is a small file, its incorrect use can significantly reduce SEO performance. Therefore, using the correct syntax is very important.

What is Crawl Budget and how does Robots.txt help with it?

Crawl Budget is the limit on how many crawl requests a search engine makes for a website. Each website is given a certain budget. If that budget is spent on unnecessary pages, important pages cannot be crawled. For example, if tag pages or search results pages continue to be crawled, the main content pages will be ignored. You can disallow such pages through Robots.txt. This ensures that bots focus only on important pages. Using Crawl Budget correctly improves indexing speed. It has a positive effect on SEO rankings. Therefore, Robots.txt is very important for improving crawl efficiency.

What is the difference between Robots.txt and Meta Robots Tags?

The robots.txt file stops bots from crawling. That is, it asks them not to fetch the page. The meta robots tag is placed in the page’s HTML and controls indexing after crawling. For example, if robots.txt blocks a page, Google will not crawl it. But if a meta robots tag with content="noindex" is used, the page will be crawled but will not be indexed. The noindex tag only works if the page is not blocked in robots.txt, because the bot has to fetch the page to see the tag. Therefore, the two are used in different situations. Robots.txt is used to keep crawlers out of sections such as admin areas. The meta robots tag is more useful to avoid duplicate content. A balanced use of both is necessary for proper SEO.

Why is it necessary to mention the sitemap in Robots.txt?

The sitemap file lists all the important pages on the website. If you mention the sitemap in Robots.txt, search engine bots can easily reach that sitemap. This makes the crawling process faster and more accurate. For example, if your site has more than 1000 pages, the sitemap points the bots in the right direction. Specifying sitemap.xml in Robots.txt improves index coverage. This is considered a best practice for search engines like Google and Bing, and it is a standard step in technical SEO. Without a sitemap, bots can skip some important pages. Therefore, adding a sitemap to Robots.txt is strongly recommended, although it is not strictly required.

Where should the Robots.txt file be placed?

The Robots.txt file should always be placed in the root directory of the website. For example, this file should be found at www.example.com/robots.txt. If it is placed in a sub-folder, search engines cannot recognize it. Bots always look for Robots.txt in the root directory. Therefore, it is necessary to have the correct permission on the server. If it is placed in the wrong place, crawling errors occur. Also, the file should be publicly accessible. It should be visible when the URL is entered in the web browser. If these things are taken care of, the file works properly.
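Because bots only look at the root of the host, the robots.txt location can be derived from any page URL. A small sketch using Python's `urllib.parse` follows. The helper name `robots_txt_url` is an assumption made for this example.

```python
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Return the robots.txt URL that governs `page_url`."""
    parts = urlsplit(page_url)
    # Keep the scheme and host; replace the path and drop query and fragment.
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


if __name__ == "__main__":
    print(robots_txt_url("https://www.example.com/blog/post?id=1"))
```

Each host is handled separately, so a subdomain such as shop.example.com needs its own file at its own root.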

How is the Robots.txt Generator Tool useful?

Creating robots.txt manually is difficult for many. One wrong rule can block the entire website. That is why the Robots.txt Generator Tool is very useful. In this, you only have to select the necessary options. Allow or Disallow paths can be easily added. Separate rules can be set for Googlebot, Bingbot or all bots. The option to add a sitemap is easy. Finally, the file can be copied or downloaded with a single click. So, even if you don’t have any coding knowledge, anyone can create a perfect file. This tool saves both time and effort. It is possible to create an SEO friendly Robots.txt in seconds.

Can an entire website be blocked using Robots.txt?

Yes, an entire website can be blocked using Robots.txt. If you write “User-agent: *” and “Disallow: /”, the website will be completely hidden from all bots. But this method should only be used in special cases. For example, when a development or testing site is live, it should not be visible in search engines. However, this rule is dangerous for live websites. In such a case, organic traffic will be completely stopped. Therefore, this rule should never be used for production websites. It is only useful for temporary experiments or private sites.

How to check whether Robots.txt is correct or not?

It is very important to check whether Robots.txt is working properly or not. For this, the robots.txt report is available in Google Search Console (it replaced the older “Robots.txt Tester” tool), and the URL Inspection tool shows whether a specific URL is blocked. Together they show whether the disallowed pages are being blocked and whether the allowed pages can be crawled. Apart from this, the robots.txt URL can be opened in the browser and the syntax can be checked. It is important to check whether the file is publicly accessible. The effect of Robots.txt is also visible in the crawl stats report. Regular checking can help you fix any errors quickly. This improves SEO performance.